TL;DR:

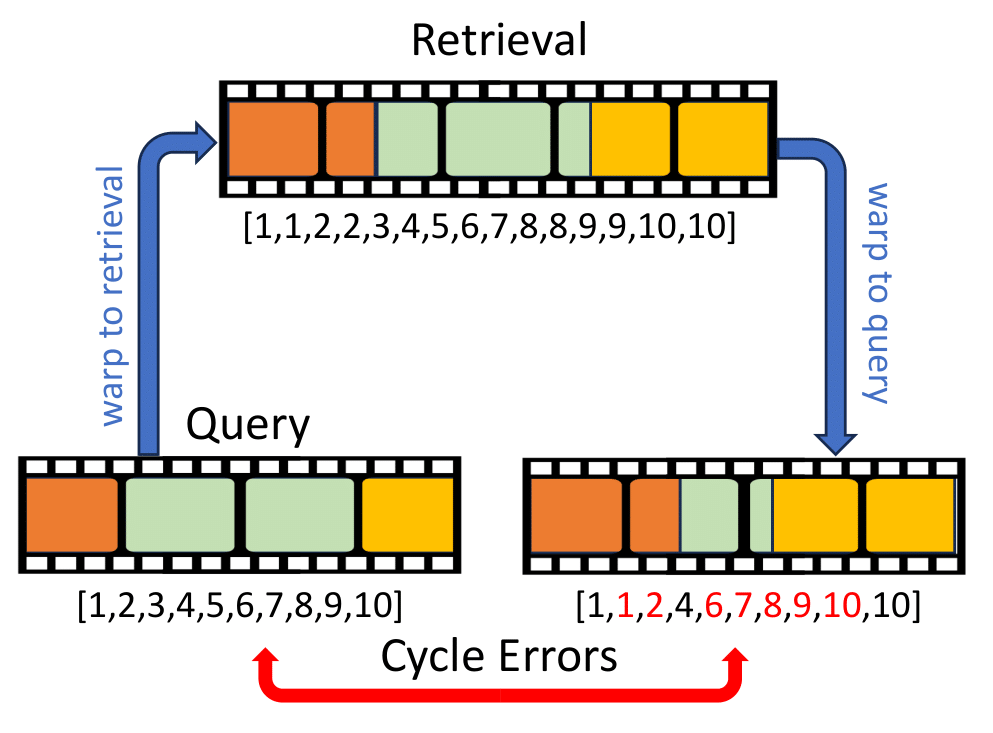

Regular Temporal Alignment: Given a pair of videos from the same action class, the goal is to align them, i.e., to match their corresponding key-event frames.

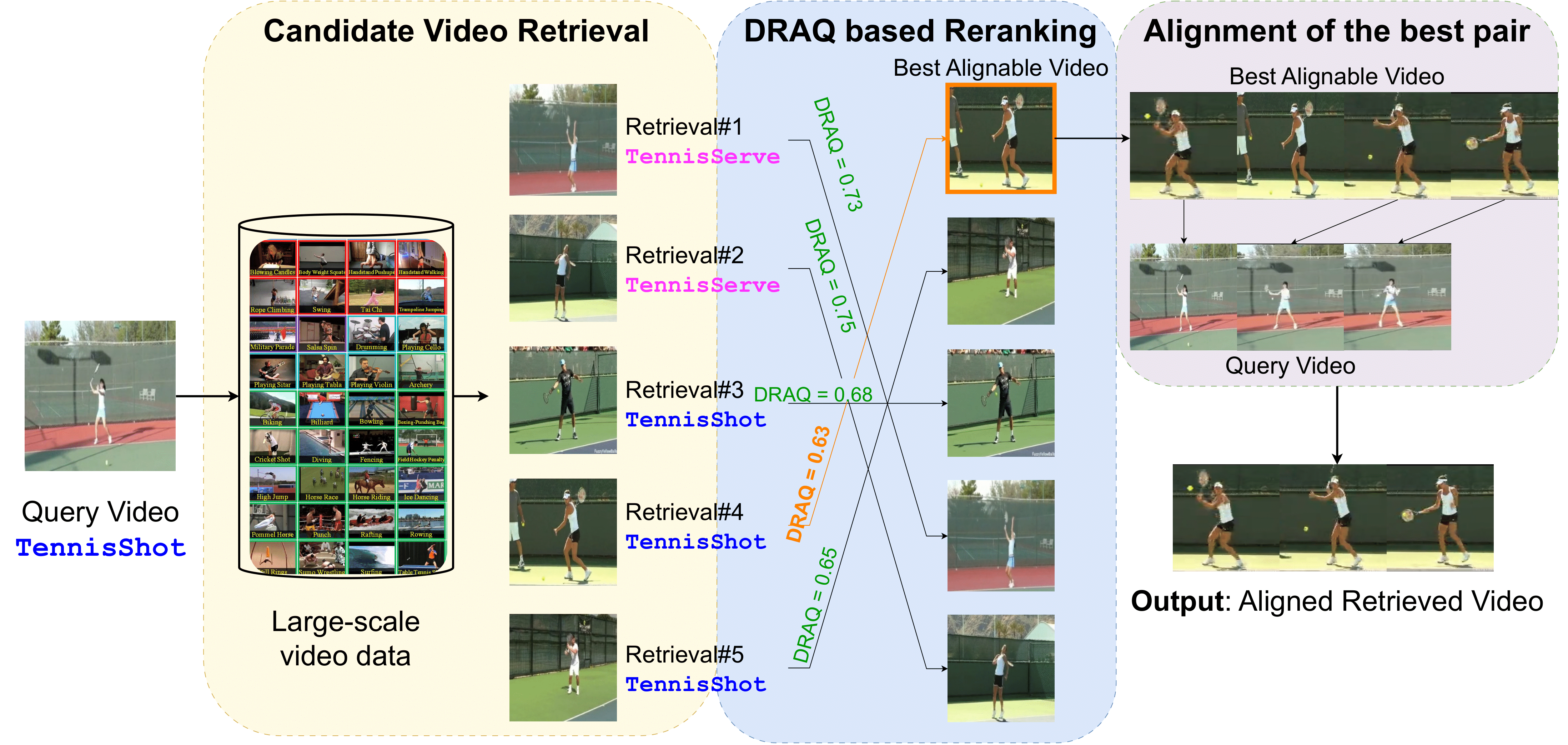

Proposed Alignable Video Retrieval (AVR): Given a query video, the goal is to find the most alignable video among the candidate videos returned by a video search.

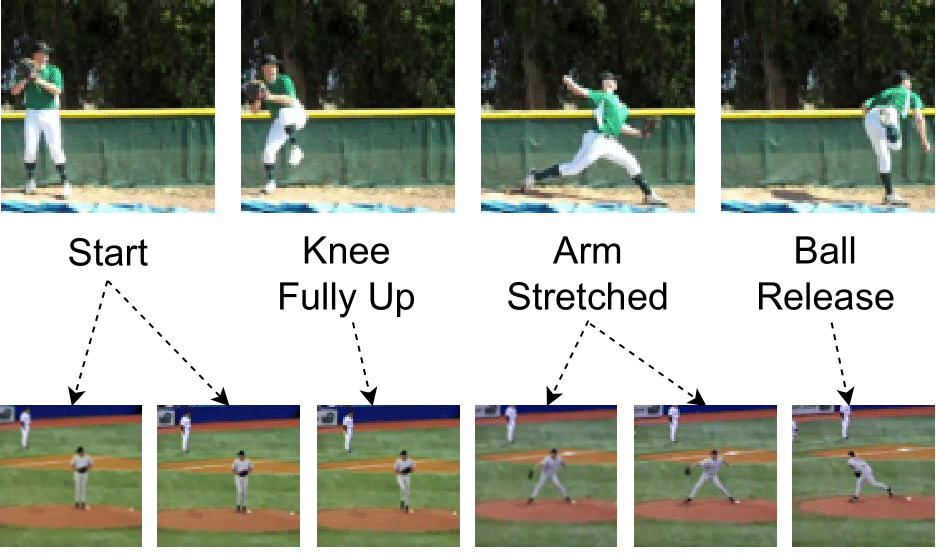

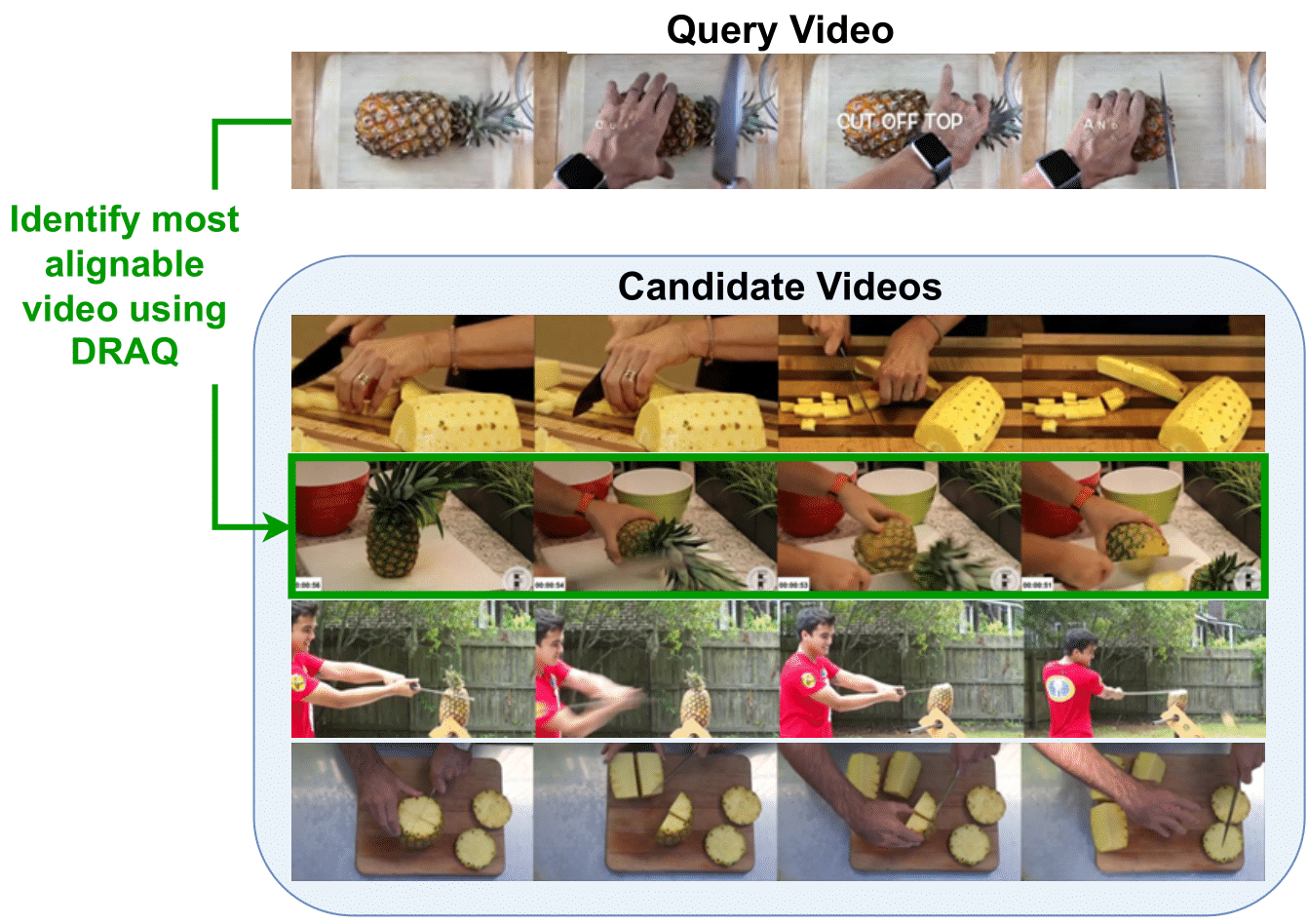

Alignable Video Retrieval. Some actions, such as "baseball swing" (left), permit temporal alignment in virtually all cases because their action phases follow a fixed sequence. Videos from other action classes, such as "cutting pineapple" (right), exhibit much more variability. For these classes, knowing the action category alone is insufficient to identify alignable pairs, and a deeper temporal understanding of the videos is required. We propose DRAQ, an alignability score that can reliably identify the alignable video pair (red) among the set of candidates.
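The AVR task can be framed as ranking the candidate videos by an alignability score and returning the top-ranked one. The block below is a minimal sketch under several assumptions. Each video is represented as a list of precomputed per-frame feature vectors. The stand-in score is the negated, length-normalized dynamic time warping (DTW) cost. DTW is used here only for illustration: it is *not* DRAQ, and it scores only frame-level matching, which may miss the deeper temporal understanding the caption calls for. All function names are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Frame = Sequence[float]  # assumed: one precomputed feature vector per frame
Video = List[Frame]


def frame_dist(a: Frame, b: Frame) -> float:
    """Euclidean distance between two frame embeddings."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dtw_cost(query: Video, cand: Video) -> float:
    """Classic DTW alignment cost, divided by (n + m) to reduce length bias.

    Illustrative stand-in only; this is NOT the DRAQ score.
    """
    n, m = len(query), len(cand)
    inf = float("inf")
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            c = frame_dist(query[i - 1], cand[j - 1])
            # Monotonic steps: match both frames, or advance only one video.
            D[i][j] = c + min(D[i - 1][j - 1], D[i - 1][j], D[i][j - 1])
    return D[n][m] / (n + m)


def neg_dtw_score(query: Video, cand: Video) -> float:
    """Higher is more alignable (hypothetical stand-in score)."""
    return -dtw_cost(query, cand)


def retrieve_alignable(
    query: Video,
    candidates: List[Video],
    score: Callable[[Video, Video], float],
) -> Tuple[int, List[float]]:
    """Return the index of the highest-scoring candidate and all scores."""
    scores = [score(query, c) for c in candidates]
    best = max(range(len(candidates)), key=lambda k: scores[k])
    return best, scores


if __name__ == "__main__":
    # Toy 1-D "videos" whose feature value tracks the action phase.
    query = [[0.0], [1.0], [2.0], [3.0]]
    candidates = [
        [[0.0], [0.0], [1.0], [2.0], [3.0]],  # same phase order, slower start
        [[3.0], [2.0], [1.0], [0.0]],         # phases reversed
        [[0.0], [3.0], [0.0], [3.0]],         # phases out of order
    ]
    best, scores = retrieve_alignable(query, candidates, neg_dtw_score)
    print(best, scores)
```

The score is passed in as a function, so a learned alignability score could replace the DTW stand-in without changing the retrieval loop.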