Low-Level Temporal Correspondence-Based Downstream Tasks

Video Object Segmentation (VOS)

We follow the semi-supervised protocol of DAVIS-2017 [43]: the object masks of the first frame of a video are given, and the task is to predict the masks in the remaining frames. No layers are tuned for this task.
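The text does not say how masks are carried forward when no layers are tuned. A common choice for frozen self-supervised features is affinity-based label propagation: each patch in a target frame takes a softmax-weighted vote over the labels of its top-k most similar patches in a reference frame. The sketch below assumes that scheme; `propagate_labels`, `top_k=5` and `temperature=0.1` are hypothetical names and values, not the authors' settings, and features are plain lists of floats for self-containment.

```python
import math


def cosine(a, b):
    """Cosine similarity between two feature vectors (lists of floats)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / (norm + 1e-12)


def propagate_labels(ref_feats, ref_labels, tgt_feats, top_k=5, temperature=0.1):
    """Hypothetical helper: propagate soft labels from reference to target patches.

    ref_feats:  N frozen feature vectors, one per reference-frame patch
    ref_labels: N probability vectors over C classes (e.g. one-hot first-frame masks)
    tgt_feats:  M frozen feature vectors, one per target-frame patch
    Returns M probability vectors over C classes.
    """
    num_classes = len(ref_labels[0])
    propagated = []
    for t in tgt_feats:
        sims = [cosine(t, r) for r in ref_feats]
        # Keep only the top-k most similar reference patches.
        nearest = sorted(range(len(sims)), key=lambda i: sims[i], reverse=True)[:top_k]
        # Temperature-scaled softmax over the kept similarities (max-subtracted for stability).
        peak = max(sims[i] for i in nearest)
        weights = [math.exp((sims[i] - peak) / temperature) for i in nearest]
        total = sum(weights)
        # Weighted vote of the neighbours' labels.
        probs = [0.0] * num_classes
        for w, i in zip(weights, nearest):
            for c in range(num_classes):
                probs[c] += (w / total) * ref_labels[i][c]
        propagated.append(probs)
    return propagated


# Toy example: reference patch 0 is background, patch 1 is the object.
ref_feats = [[1.0, 0.0], [0.0, 1.0]]
ref_labels = [[1.0, 0.0], [0.0, 1.0]]
tgt_feats = [[0.9, 0.1], [0.1, 0.9]]
probs = propagate_labels(ref_feats, ref_labels, tgt_feats, top_k=2)
mask = [max(range(len(p)), key=p.__getitem__) for p in probs]  # per-patch argmax: [0, 1]
```

In a full evaluation this step would typically run frame by frame, with the per-patch probabilities upsampled to pixel resolution before the argmax.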

| Pretraining | J&F-Mean | J-Mean | F-Mean |
|---|---|---|---|
| ST-MAE | 53.5 | 52.6 | 54.4 |
| VideoMAE | 53.8 | 53.2 | 54.4 |
| MotionMAE | 56.8 | 55.8 | 57.8 |
| SVT | 48.5 | 46.8 | 50.1 |
| Ours (ViT-B) | 62.1 | 60.5 | 63.6 |
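In the DAVIS benchmark, J is region similarity (the Jaccard index, i.e. intersection-over-union of predicted and ground-truth masks) and F is contour accuracy (a boundary F-measure); J&F-Mean is the average of the two. The sketch below computes J for masks given as sets of pixel coordinates and checks that every row of the table satisfies J&F-Mean = (J-Mean + F-Mean) / 2 up to rounding. The boundary-matching step behind F is omitted, and `jaccard` and `jf_mean` are illustrative helpers, not the official DAVIS toolkit.

```python
def jaccard(pred, gt):
    """Region similarity J: intersection-over-union of two binary masks,
    each given as a set of (row, col) foreground pixel coordinates."""
    union = pred | gt
    if not union:
        return 1.0  # both masks empty: count as a perfect match
    return len(pred & gt) / len(union)


def jf_mean(j_mean, f_mean):
    """J&F-Mean: arithmetic mean of J-Mean and F-Mean."""
    return (j_mean + f_mean) / 2


# (J-Mean, F-Mean, reported J&F-Mean), copied from the table above.
rows = {
    "ST-MAE": (52.6, 54.4, 53.5),
    "VideoMAE": (53.2, 54.4, 53.8),
    "MotionMAE": (55.8, 57.8, 56.8),
    "SVT": (46.8, 50.1, 48.5),
    "Ours (ViT-B)": (60.5, 63.6, 62.1),
}
for name, (j, f, reported) in rows.items():
    # Reported values are rounded to one decimal place.
    assert abs(jf_mean(j, f) - reported) <= 0.05 + 1e-9, name

# One shared pixel out of three pixels in the union.
example_j = jaccard({(0, 0), (0, 1)}, {(0, 1), (1, 1)})
```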

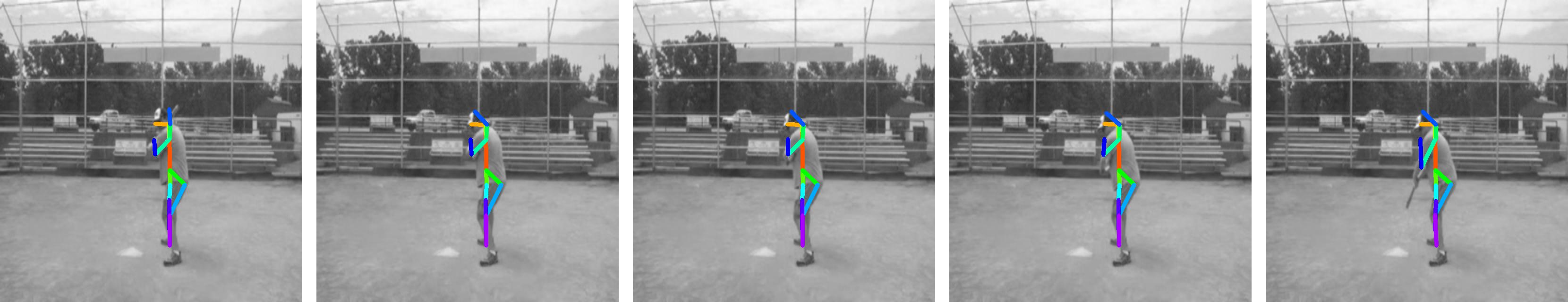

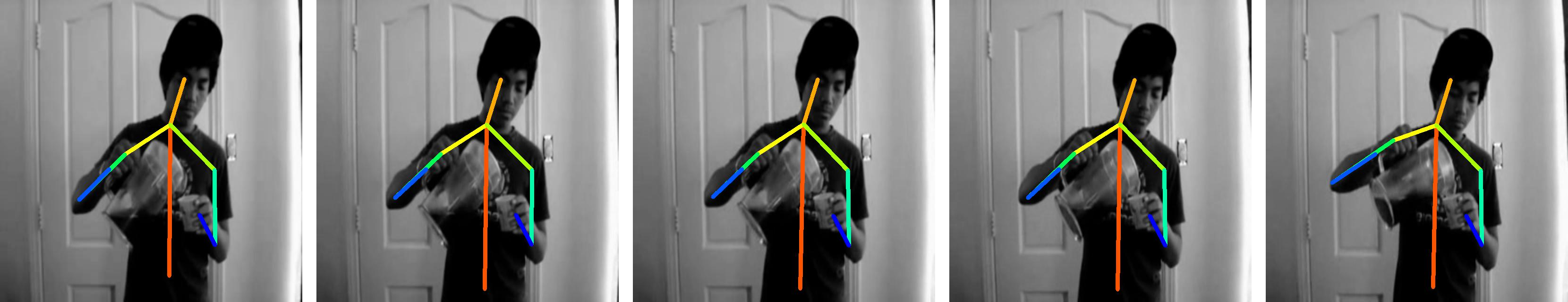

Human Pose Propagation

In this protocol, the keypoints of the human pose are given for the first frame, and the task is to predict the locations of those keypoints in the subsequent frames. We use the pretrained video SSL model without any further tuning.
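A minimal way to move keypoints with frozen features is nearest-neighbour patch matching: each keypoint's reference patch feature is compared with every patch in the target frame, and the keypoint moves to the most similar one. This is a simplified stand-in, assumed for illustration, for the label-propagation style protocols usually used here; `propagate_keypoints` is a hypothetical helper, and positions are patch-grid coordinates rather than pixels.

```python
import math


def cosine(a, b):
    """Cosine similarity between two feature vectors (lists of floats)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / (norm + 1e-12)


def propagate_keypoints(ref_grid, tgt_grid, keypoints):
    """Hypothetical helper: move each keypoint to the most similar target patch.

    ref_grid, tgt_grid: dicts mapping (row, col) patch positions to feature vectors
    keypoints: (row, col) patch positions of the keypoints in the reference frame
    Returns the predicted (row, col) patch positions in the target frame.
    """
    predictions = []
    for kp in keypoints:
        query = ref_grid[kp]
        # Pick the target patch whose feature best matches the keypoint's patch.
        best = max(tgt_grid, key=lambda pos: cosine(query, tgt_grid[pos]))
        predictions.append(best)
    return predictions


# Toy frames: the target frame's content is a shuffled version of the reference.
ref_grid = {(0, 0): [1, 0, 0], (0, 1): [0, 1, 0], (1, 0): [0, 0, 1], (1, 1): [1, 1, 0]}
tgt_grid = {(0, 0): [0, 0, 1], (0, 1): [1, 0, 0], (1, 0): [1, 1, 0], (1, 1): [0, 1, 0]}
moved = propagate_keypoints(ref_grid, tgt_grid, [(0, 0), (0, 1)])
```

Chaining the call frame to frame (each prediction becoming the next reference) gives a full track; mapping patch positions to pixels would multiply by the patch size, which is an assumption about the backbone.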

High-Level Semantics-Based Downstream Tasks

Video-to-Video Retrieval

We perform video retrieval experiments to demonstrate the suitability of our features for semantic video similarity search; no finetuning is allowed. Following prior works [9, 13, 21], the test set of each dataset is used as the query set and the training set as the search set. We report Top-1 and Top-5 retrieval accuracy.
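Top-k retrieval accuracy is commonly defined as the fraction of queries for which at least one of the k nearest search-set videos shares the query's class. The sketch below assumes that definition, cosine similarity as the ranking score, and one pooled feature vector per video; `topk_retrieval_accuracy` is a hypothetical helper.

```python
import math


def cosine(a, b):
    """Cosine similarity between two feature vectors (lists of floats)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / (norm + 1e-12)


def topk_retrieval_accuracy(query_feats, query_labels, search_feats, search_labels, k):
    """Fraction of queries whose k nearest search-set videos include the query's class."""
    hits = 0
    for q, q_label in zip(query_feats, query_labels):
        # Rank every search-set video by similarity to the query (most similar first).
        ranked = sorted(range(len(search_feats)),
                        key=lambda i: cosine(q, search_feats[i]), reverse=True)
        if any(search_labels[i] == q_label for i in ranked[:k]):
            hits += 1
    return hits / len(query_feats)


# Toy data: "test" videos act as queries, "train" videos as the search set.
search_feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
search_labels = ["a", "b", "c"]
query_feats = [[0.9, 0.2], [0.2, 0.9]]
query_labels = ["a", "c"]
top1 = topk_retrieval_accuracy(query_feats, query_labels, search_feats, search_labels, k=1)
top2 = topk_retrieval_accuracy(query_feats, query_labels, search_feats, search_labels, k=2)
```

Here the second query's nearest neighbour has the wrong class but its second-nearest matches, so it counts as a Top-2 hit and a Top-1 miss.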

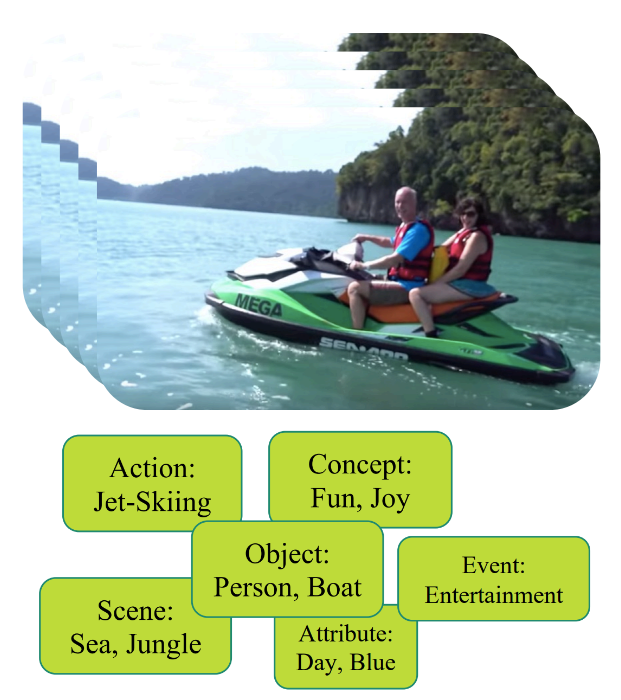

Multi-label Video Attributes Recognition

Holistic Video Understanding (HVU) [12] is a large-scale benchmark for multi-label, multi-task video understanding that covers multiple semantic aspects, including scenes, objects, actions, events, attributes, and concepts. We train separate linear classifiers on frozen features and report results as mean average precision (mAP).
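Since each HVU video can carry several labels, a linear probe outputs an independent score per label, and mAP averages the per-label average precision. The sketch below assumes the non-interpolated AP (mean of precision at the rank of each positive) and skips labels with no positive videos; whether the evaluation uses exactly this variant is an assumption, and both helper names are hypothetical.

```python
def average_precision(scores, targets):
    """Non-interpolated AP for one label; returns None if there are no positives."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits, precisions = 0, []
    for rank, i in enumerate(order, start=1):
        if targets[i]:
            hits += 1
            precisions.append(hits / rank)  # precision at this positive's rank
    return sum(precisions) / len(precisions) if precisions else None


def mean_average_precision(score_matrix, target_matrix):
    """mAP over labels; rows are videos, columns are labels (probe scores / 0-1 targets)."""
    aps = []
    for c in range(len(score_matrix[0])):
        ap = average_precision([row[c] for row in score_matrix],
                               [row[c] for row in target_matrix])
        if ap is not None:  # labels without positives are skipped
            aps.append(ap)
    return sum(aps) / len(aps)


# Toy probe outputs for 4 videos and 2 labels.
scores = [[0.9, 0.2], [0.8, 0.7], [0.3, 0.6], [0.1, 0.1]]
targets = [[1, 0], [0, 1], [1, 0], [0, 0]]
m_ap = mean_average_precision(scores, targets)  # label APs 5/6 and 1.0
```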

Example from the HVU dataset.

| Method | Action | Object | Scene | Event | Attribute | Concept | Overall |
|---|---|---|---|---|---|---|---|
| SVT | 38.48 | 30.35 | 30.97 | 37.87 | 28.20 | 35.64 | 33.58 |
| ρBYOL | 33.20 | 25.82 | 28.40 | 35.50 | 24.16 | 33.21 | 30.05 |
| VideoMAE | 27.49 | 23.36 | 24.56 | 29.78 | 21.04 | 28.75 | 25.83 |
| Ours (ViT-B) | 38.65 | 33.46 | 34.24 | 40.23 | 30.99 | 38.38 | 35.99 |
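The Overall column appears to be the unweighted mean of the six per-category mAP values; the short check below confirms this holds for every row of the table up to two-decimal rounding. The `overall` helper is illustrative and inferred from the numbers, not taken from the evaluation code.

```python
# Per-category mAP values copied from the HVU table above:
# (Action, Object, Scene, Event, Attribute, Concept, reported Overall)
rows = {
    "SVT": (38.48, 30.35, 30.97, 37.87, 28.20, 35.64, 33.58),
    "ρBYOL": (33.20, 25.82, 28.40, 35.50, 24.16, 33.21, 30.05),
    "VideoMAE": (27.49, 23.36, 24.56, 29.78, 21.04, 28.75, 25.83),
    "Ours (ViT-B)": (38.65, 33.46, 34.24, 40.23, 30.99, 38.38, 35.99),
}


def overall(category_maps):
    """Unweighted mean of the per-category mAP values."""
    return sum(category_maps) / len(category_maps)


for name, (*cats, reported) in rows.items():
    # Reported values have two decimals, so allow half a unit of rounding.
    assert abs(overall(cats) - reported) <= 0.005 + 1e-9, name
```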

BibTeX

@inproceedings{dave2024nomore,
  title={No More Shortcuts: Realizing the Potential of Temporal Self-Supervision},
  author={Dave, Ishan and Jenni, Simon and Shah, Mubarak},
  booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},
  year={2024}
}